How do you differ from other platforms such as NotebookLM, or from using an LLM on its own?

That's an excellent question! We are not a question-answering chatbot. Instead, we prepare your corpus (the documents you want the AI to draw on) so that any LLM you choose gives better answers from it.

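RAG stands for Retrieval-Augmented Generation. It is a technique in which a system first retrieves relevant passages from a collection of documents and then hands them to a language model as context, so the model's answer is grounded in that material rather than only in what it learned during training.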
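To make the retrieve-then-generate idea concrete, here is a minimal sketch of the first two steps. It is a generic illustration, not how our platform works: it assumes plain word-overlap (bag-of-words cosine) scoring as a stand-in for the embedding search that production systems typically use, and the helper names (`retrieve`, `build_prompt`) and prompt wording are hypothetical. The final step, sending the prompt to an LLM, is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augmentation step: place the retrieved passages ahead of the question.

    The prompt wording is an illustrative assumption; the generation step
    (sending this prompt to an LLM) is not shown.
    """
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    corpus = [
        "The warranty covers manufacturing defects for two years.",
        "Our office is open Monday to Friday.",
        "Batteries are covered by the warranty for one year.",
    ]
    question = "How long does the warranty cover batteries?"
    top = retrieve(question, corpus, k=2)
    print(build_prompt(question, top))
```

With this toy corpus, the battery passage ranks first and the office-hours passage is dropped, so the model would only see the two warranty passages. The quality of the final answer depends heavily on how well the corpus is split and indexed before this step runs.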

There are many ways to implement RAG.

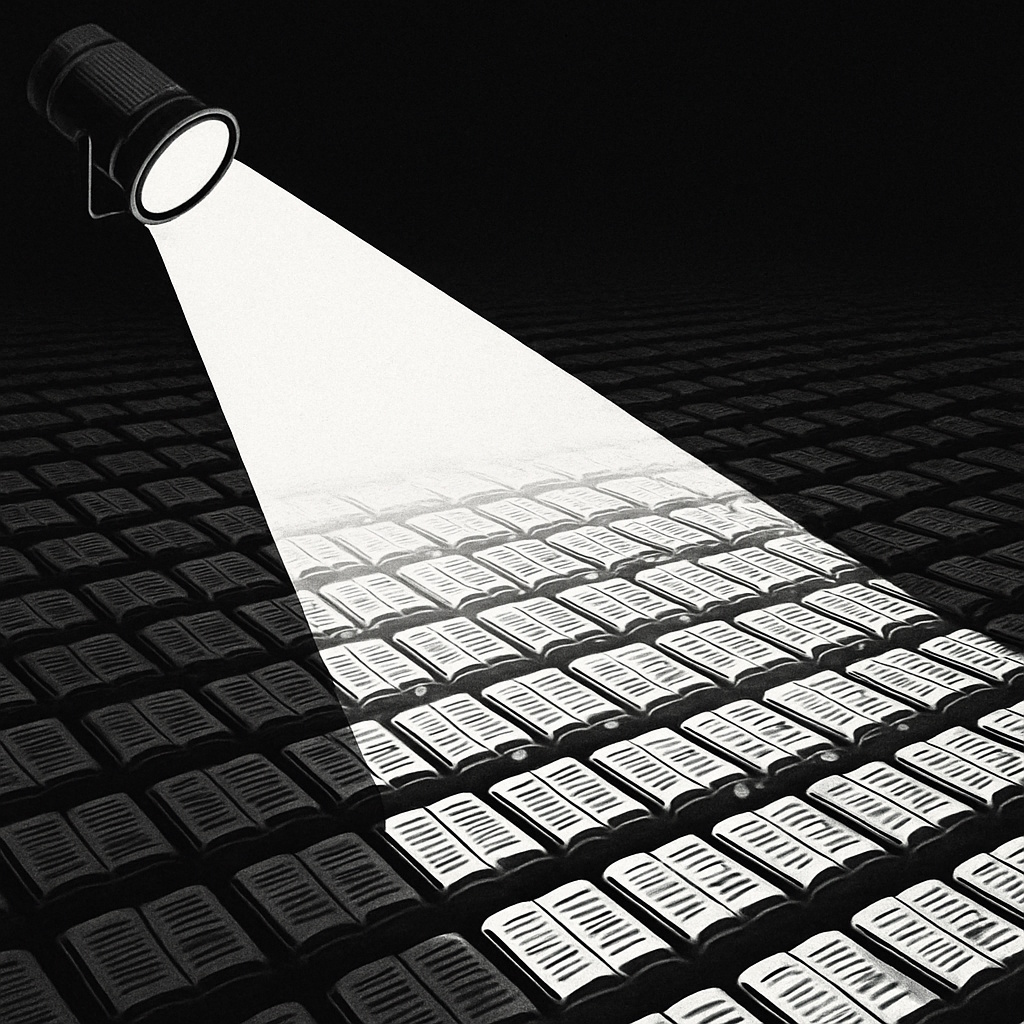

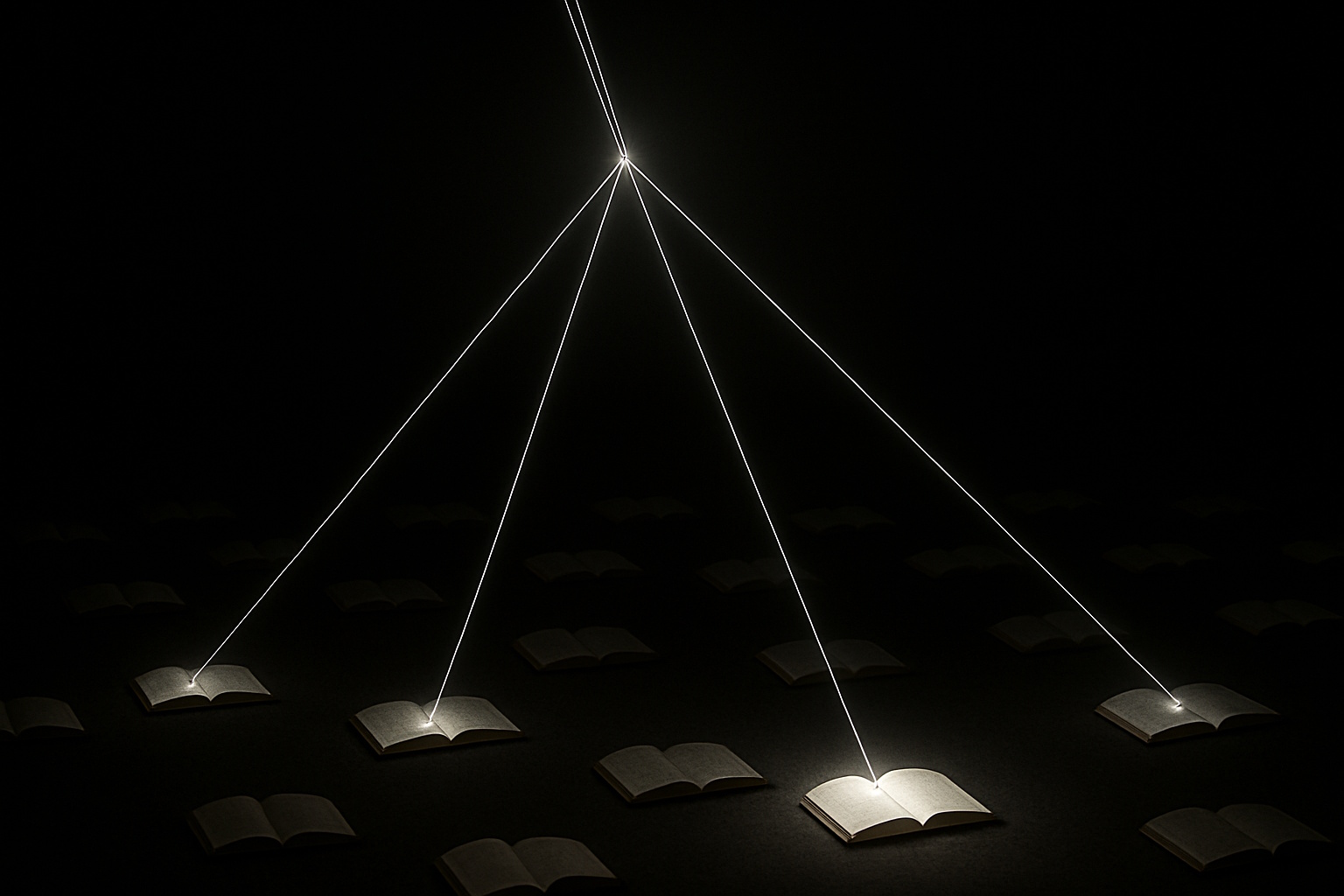

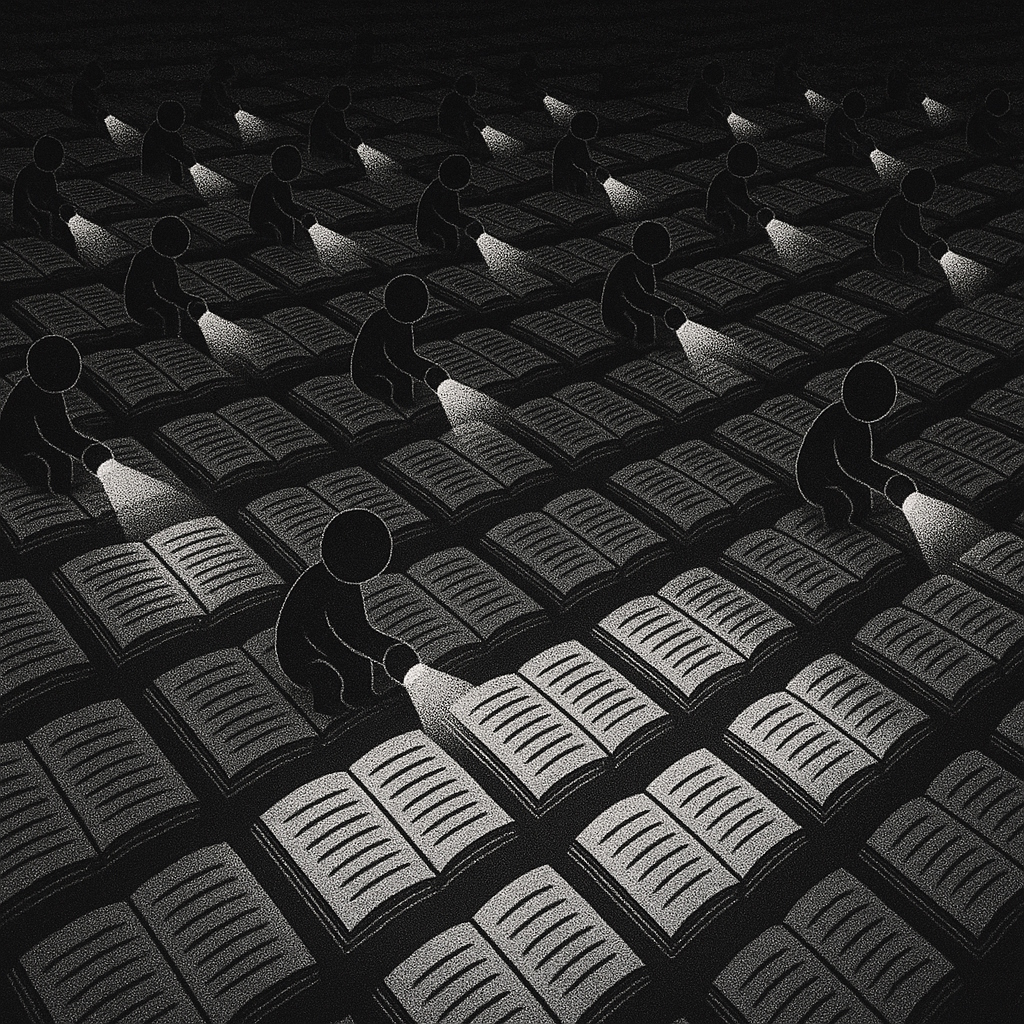

The most prominent difference is a technical one. In simple terms, if information were a huge field of books lying in the dark: